How to Handle Long Prompts in ChatGPT, Claude, DeepSeek, and Other AI Models

As AI tools like ChatGPT, Claude, DeepSeek, Mistral, and Gemini become more powerful, nearly every regular user eventually runs into the same problem: prompt length. When you send a long instruction or a large chunk of text, you can hit a token limit and get an error or a cut-off response. In this article, we show you how to handle long prompts effectively using prompt splitting, streamlined formatting, and dedicated tools.

Why Long Prompts Cause Errors Across Different AIs

Most AI models operate under a token limit (the context window) for each conversation or message. Tokens are chunks of text, not characters, so a prompt that contains code, formatting, or complex structure can use more tokens than its length suggests.

- ChatGPT (OpenAI): GPT-4 Turbo supports a context window of about 128k tokens, but the free tier of ChatGPT has lower limits.

- Claude (Anthropic): Claude 3 Opus supports a large context window (about 200k tokens), but the length of each response is still capped.

- DeepSeek: The context limit varies by model version, and some versions may silently cut off long prompts.

- Gemini, Mistral, and others: Many models truncate or return errors for overly long prompts.
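Before pasting a long prompt, it can help to estimate whether it will fit. The sketch below uses the commonly cited rule of thumb of roughly 4 characters per token for English text; this is an approximation, not a real tokenizer, and exact counts depend on each model's own tokenizer (for OpenAI models, the `tiktoken` library gives exact counts). The names `estimate_tokens` and `fits_in_context`, and the default of 4,000 tokens reserved for the reply, are illustrative assumptions. Many models count the reply against the same limit, so leaving some headroom is a reasonable precaution.

```python
import math

# Rough rule of thumb (an approximation, not a real tokenizer):
# about 4 characters per token for typical English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for `text`, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(prompt: str, context_limit: int, reserved_for_output: int = 4_000) -> bool:
    """Check whether the prompt plus room for the reply fits in the context window.

    `context_limit` and `reserved_for_output` are values you supply for the
    model you use; nothing here looks them up automatically.
    """
    return estimate_tokens(prompt) + reserved_for_output <= context_limit


if __name__ == "__main__":
    prompt = "Summarize the following report. " * 2_000  # 64,000 characters
    print(estimate_tokens(prompt))  # → 16000
    print(fits_in_context(prompt, context_limit=128_000))  # → True
```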

How to Split Long Prompts Effectively

Need help splitting prompts?

Try our free tool to break down long messages easily

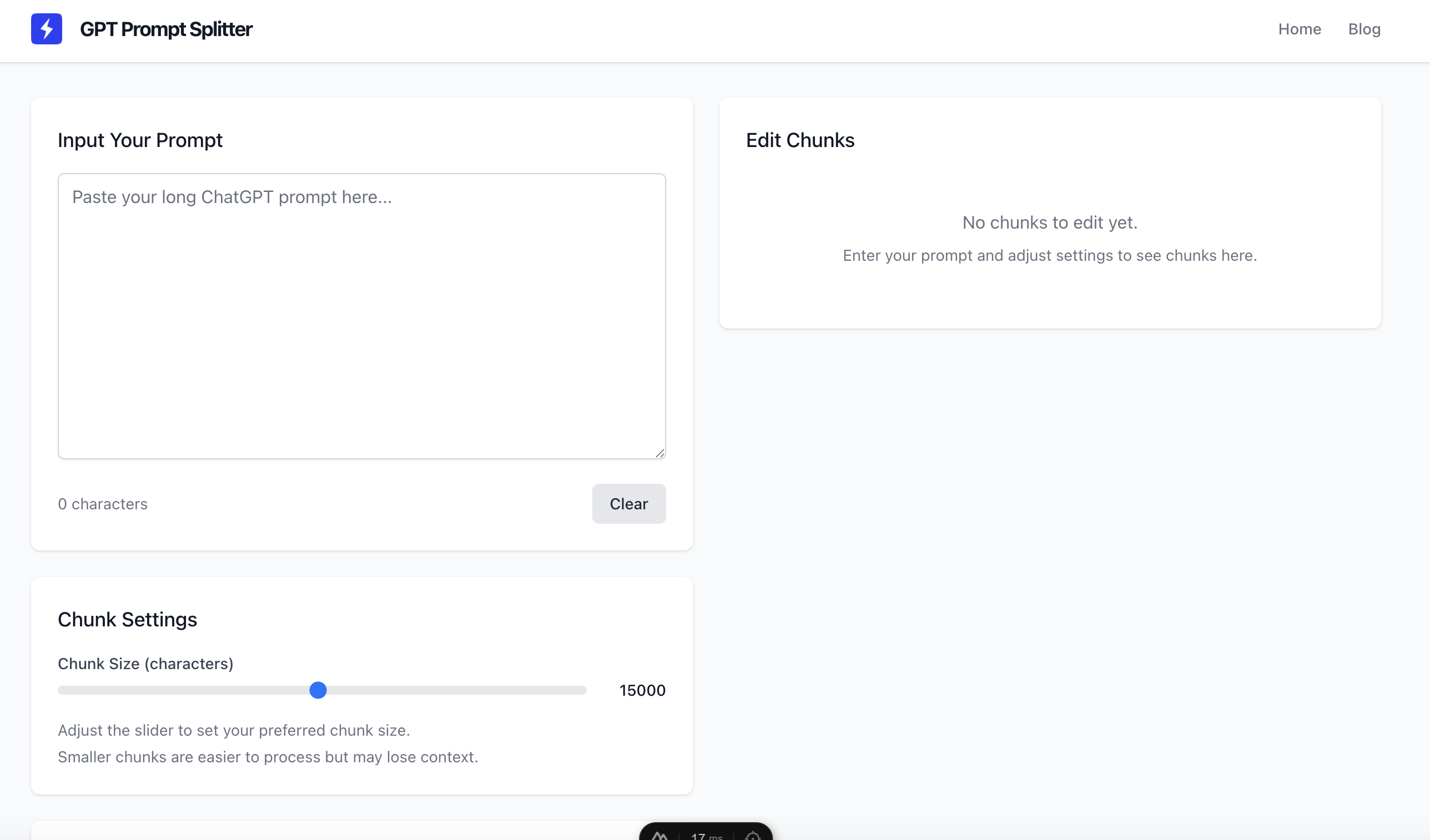

1. Use a Prompt Splitting Tool

Tools like GPT Prompt Splitter let you break up long text into manageable chunks that stay within token limits. This is ideal for:

- Large code files

- Multi-part instructions

- Bulk content analysis
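A splitter of this kind can be sketched in a few lines. This is not how GPT Prompt Splitter or any other specific tool works; it is a minimal illustration, assuming the same rough 4-characters-per-token estimate, that keeps paragraphs together where possible and only cuts a paragraph when it alone exceeds the budget. The function name `split_prompt` is hypothetical.

```python
def split_prompt(text: str, max_tokens: int, chars_per_token: int = 4) -> list[str]:
    """Split `text` into chunks whose estimated token count stays within `max_tokens`.

    Paragraphs (separated by blank lines) are kept together when possible; a
    paragraph that is too long on its own is cut at the character budget.
    """
    if max_tokens <= 0 or chars_per_token <= 0:
        raise ValueError("max_tokens and chars_per_token must be positive")
    budget = max_tokens * chars_per_token  # approximate character budget per chunk
    chunks: list[str] = []
    current = ""
    for para in text.split("\n\n"):
        if not para.strip():
            continue  # skip empty or whitespace-only paragraphs
        # Cut an oversized paragraph into budget-sized pieces.
        pieces = [para[i:i + budget] for i in range(0, len(para), budget)]
        for piece in pieces:
            candidate = f"{current}\n\n{piece}" if current else piece
            if len(candidate) <= budget:
                current = candidate  # the piece fits in the current chunk
            else:
                if current:
                    chunks.append(current)  # close the full chunk
                current = piece  # start a new chunk with this piece
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    long_text = "\n\n".join(f"Paragraph {n}: " + "lorem ipsum " * 50 for n in range(1, 21))
    parts = split_prompt(long_text, max_tokens=500)
    for i, part in enumerate(parts, start=1):
        print(f"Part {i}: {len(part)} characters")
```

Splitting on blank lines keeps related sentences together, which usually makes each part easier for the model to follow than a hard cut every N characters.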

2. Send Context in Parts

Start with a short message explaining what you are about to send, then send the parts in order. For example, in ChatGPT or Claude:

- Message 1: "I will send you a research paper in 3 parts. Acknowledge when ready."

- Message 2: Part 1

- Message 3: Part 2, etc.
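The message sequence above can be generated from a list of already-split parts. In this sketch, `build_part_messages` is a hypothetical helper, and labeling each part as "Part i of n" is an assumption about what helps the model track its place in the sequence, not a documented requirement of ChatGPT or Claude.

```python
def build_part_messages(parts: list[str], description: str = "a document") -> list[str]:
    """Return an announcement message followed by one labeled message per part."""
    if not parts:
        raise ValueError("parts must not be empty")
    total = len(parts)
    noun = "part" if total == 1 else "parts"
    # First message: tell the model what is coming and how many parts to expect.
    messages = [f"I will send you {description} in {total} {noun}. Acknowledge when ready."]
    # Remaining messages: each part labeled with its position in the sequence.
    for i, part in enumerate(parts, start=1):
        messages.append(f"Part {i} of {total}:\n\n{part}")
    return messages


if __name__ == "__main__":
    paper_parts = ["Abstract and introduction...", "Methods...", "Results and discussion..."]
    for message in build_part_messages(paper_parts, description="a research paper"):
        print(message)
        print("---")
```

You would then paste each returned message as a separate turn, waiting for the model's acknowledgment before sending the next one.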

3. Upload Files (When Supported)

Claude and ChatGPT (on plans that support file uploads) let you upload documents instead of pasting long text. Use this feature for:

- Long-form documents or books

- Large JSON or CSV files

- Codebases

4. Simplify and Compress

Reduce formatting, whitespace, or unnecessary metadata before sending prompts. Avoid large code blocks in a single message.
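Two simple compression steps are sketched below: collapsing redundant whitespace in prose, and minifying JSON so that indentation does not consume tokens. Both functions are illustrative helpers, not features of any AI tool. Note that `compress_whitespace` strips leading indentation, so it should not be applied to Python code or YAML, where indentation carries meaning.

```python
import json
import re


def compress_whitespace(text: str) -> str:
    """Collapse runs of spaces/tabs, trim each line, and squeeze 3+ newlines to one blank line."""
    lines = [re.sub(r"[ \t]+", " ", line).strip() for line in text.splitlines()]
    compact = "\n".join(lines)
    return re.sub(r"\n{3,}", "\n\n", compact).strip()


def minify_json(raw: str) -> str:
    """Re-serialize JSON without indentation or spaces after separators."""
    return json.dumps(json.loads(raw), separators=(",", ":"), ensure_ascii=False)


if __name__ == "__main__":
    prose = "This   line has   extra spaces.   \n\n\n\nAnd too many blank lines."
    print(compress_whitespace(prose))
    pretty = '{\n    "name": "report",\n    "pages": [1, 2, 3]\n}'
    print(minify_json(pretty))  # → {"name":"report","pages":[1,2,3]}
```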

5. Use Model-Specific Features

Some AIs offer features that make long content easier to manage, such as system prompts or document uploads. For instance:

- ChatGPT: Use system messages to set roles, then break the input logically.

- Claude: Handles uploaded documents well; uploading a file often works better than pasting its contents.

- DeepSeek & Mistral: Keep prompts minimal, and link context across messages.
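If you work with the OpenAI API rather than the ChatGPT app, the ChatGPT tip maps onto the `messages` list used by the Chat Completions API, where each entry has a `role` (such as `system` or `user`) and `content`. The sketch below only builds that list; `build_chat_messages` and the "[Part i of n]" labels are illustrative assumptions, and actually sending the request (for example with the official `openai` Python package) is left out so the example runs without an API key.

```python
def build_chat_messages(system_prompt: str, parts: list[str]) -> list[dict[str, str]]:
    """Build a Chat Completions-style list: one system message that sets the role,
    then one user message per part, each labeled with its position."""
    messages = [{"role": "system", "content": system_prompt}]
    total = len(parts)
    for i, part in enumerate(parts, start=1):
        messages.append({"role": "user", "content": f"[Part {i} of {total}]\n{part}"})
    return messages


if __name__ == "__main__":
    msgs = build_chat_messages(
        "You are a code reviewer. The user's code is split into labeled parts; review them as one file.",
        ["def load(path): ...", "def save(path, data): ..."],
    )
    for m in msgs:
        print(m["role"], "->", m["content"].splitlines()[0])
```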

Final Thoughts

When working with AIs like ChatGPT, Claude, or DeepSeek, understanding how to split prompts and manage token limits is key. Whether you're writing a research prompt, debugging code, or feeding in multiple documents, a prompt splitting strategy helps keep your AI assistant responsive and accurate.

Don't let length limits hold you back: split your prompt, upload files where possible, and lean on dedicated tools to simplify the process.

Try our free solution

Use our Prompt Splitter to automatically divide and format your message.